Infrastructures of Aina

The Aina project is coordinated by the Barcelona Supercomputing Center (BSC-CNS), a leading center in the field of supercomputing. The MareNostrum 5 supercomputer makes it possible to process massive amounts of data and to run advanced language models.

The supercomputer has a capacity of 314 petaflops and is located on the UPC Campus Nord (BarcelonaTech). MareNostrum 5 has a pre-exascale power 100 times greater than MareNostrum 4, an advance that represents a great opportunity for running language resources and technologies that require high performance.

Data management, a collective effort

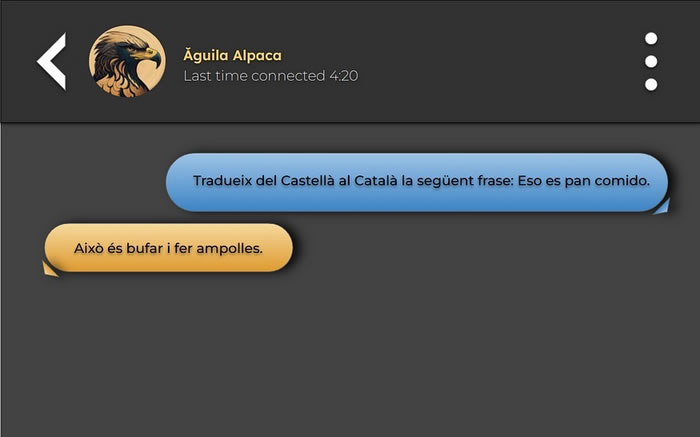

To develop linguistic resources, Aina works with text and voice data that it receives through the collaboration of users, together with other available resources. The project works with organizations from the language community and other sectors to collect and process large amounts of data.

Language models are key to the development of new applications. Aina works on generating and updating these models, whether monolingual, multilingual, or multimodal.

The project also helps integrate Catalan modules and libraries into reference environments and platforms to ensure proper coverage of our language.

Join Common Voice and be part of the effort to position Catalan as one of the languages with the most resources available within the digital sphere.

Prominent organizations such as Google have drawn on the data collected in these repositories for language models such as PaLM 2.

Construction of pioneering models

Training language models is a fundamental step in building applications based on artificial intelligence. Aina's goal is therefore to train models that represent progress in terms of security and response efficiency.

The generation of large language models (LLMs) is a progressive process that accelerates the creation of new models, reducing the cost and resources needed to train them. Salamandra (available in 7B and 2B sizes, with instructed versions) is the large language model developed within the framework of the Aina project and released by researchers from the Language Technologies Unit of the Barcelona Supercomputing Center (BSC).

The design of multilingual LLMs helps promote the artificial intelligence and natural language processing sector in Catalonia. It also helps optimize processes and internationalize products, improve the services of public administrations, widen citizens' access to Catalan content in the digital sphere and in the training sector, and promote the exchange of technology and knowledge among researchers in the scientific community.

Events