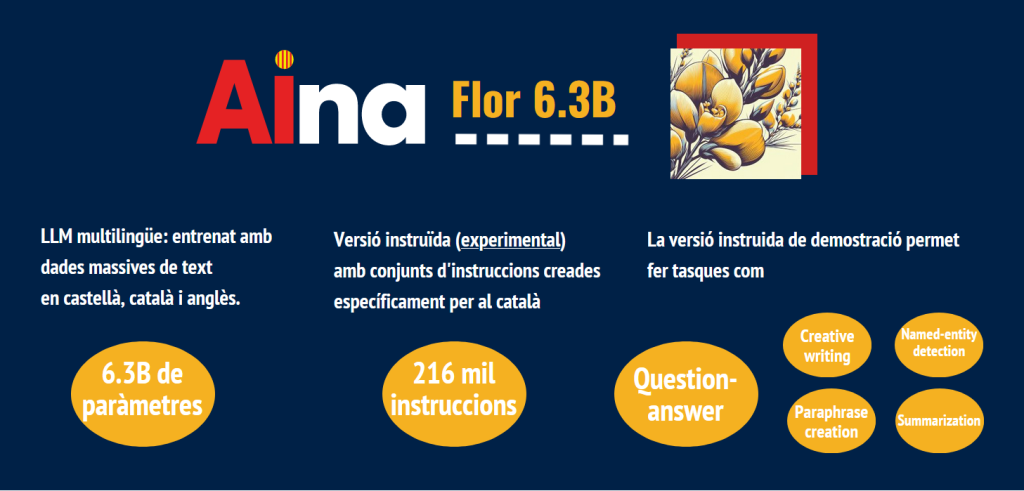

The Language Technologies Unit of the Barcelona Supercomputing Center (BSC) continues to make progress in the development of multilingual models with the launch of Flor-6.3B, a new, fully open-source language model. It is the second large language model (LLM) trained by the Unit so far, after Águila7B. Flor is based on Bloom-7b and was further pre-trained on a corpus of 140 billion tokens in Catalan, Spanish and English. With 6.3 billion parameters, the model will allow advanced users and developers to explore the possibilities of this new artificial intelligence infrastructure, designed around the languages and needs of our environment. It can be used together with the instructions in Spanish and Catalan recently published by Aina. The model is ready for text generation and can be adapted to specific tasks through fine-tuning.

It is an approach that, according to Carlos Rodríguez, researcher at the Language Technologies Unit of the BSC, “makes it possible to create models more closely linked to the language and the desired cultural conventions without having to start from scratch, since this would be a prohibitive cost”. The experimental instructed version of the model can be tested in the demo Spaces of the Aina project repository on Hugging Face. It was fine-tuned on more than 240,000 instructions in three languages, covering tasks such as summarization, question answering, translation, document classification and ad hoc text generation. It is important to note that this trial version is experimental: it has not yet been adapted to filter content that may be offensive, incorrect or inappropriate. All language models trained on texts from the Internet carry these risks.

Thanks to the compact size of the models launched by the project, they can be run on desktop computers without the need for large infrastructures. They are available on Hugging Face. However, Aina is also working on much larger models that require very demanding technical specifications. The launch of MareNostrum 5 will facilitate the training of these new language models.
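
As a rough illustration of why a model of this size can fit on desktop hardware, the sketch below estimates the memory needed to hold 6.3 billion parameters at several common numeric precisions. The parameter count comes from the announcement; the precisions listed and the function name `weight_memory_gb` are assumptions chosen for the example, and the figures cover the weights only, not the additional memory used while generating text.

```python
# Rough, weight-only memory estimate for a 6.3-billion-parameter model.
# Assumption: memory = parameters x bytes per parameter. Activations and
# other runtime buffers are NOT included, so real usage will be higher.

PARAMS = 6.3e9  # parameter count stated in the announcement

# Storage size of one parameter at common precisions (illustrative choices).
BYTES_PER_PARAM = {
    "float32": 4.0,
    "float16/bfloat16": 2.0,
    "int8": 1.0,
    "int4": 0.5,
}


def weight_memory_gb(n_params: float, bytes_per_param: float) -> float:
    """Return the memory needed for the weights alone, in GB (10**9 bytes)."""
    return n_params * bytes_per_param / 1e9


if __name__ == "__main__":
    for name, size in BYTES_PER_PARAM.items():
        print(f"{name}: about {weight_memory_gb(PARAMS, size):.1f} GB")
```

Under these assumptions the weights take about 25 GB in 32-bit precision and about 12.6 GB in 16-bit precision, which is within reach of a well-equipped desktop machine, while lower-precision formats reduce the footprint further.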

All the information on the model is available on Medium.

Project Aina | Communication and press

press.languagetech@bsc.es